Load balancing is a critical component of modern IT infrastructure, designed to efficiently distribute incoming network traffic across multiple servers. This process optimizes resource utilization, enhances system performance, and ensures high availability for applications and websites. Understanding how load balancing works is crucial for maintaining a scalable and reliable network.

- What is Load Balancing?

- How Load Balancing Works

- Key Benefits of Load Balancing

- Load Balancing Algorithms

What is Load Balancing?

Load balancing is a technique that distributes incoming network traffic or computing workload across multiple servers or resources. It also ensures that no single server is overwhelmed, which prevents performance degradation and provides fault tolerance. It is commonly used with web servers, database servers, and other critical systems to enhance responsiveness and availability.

How Load Balancing Works:

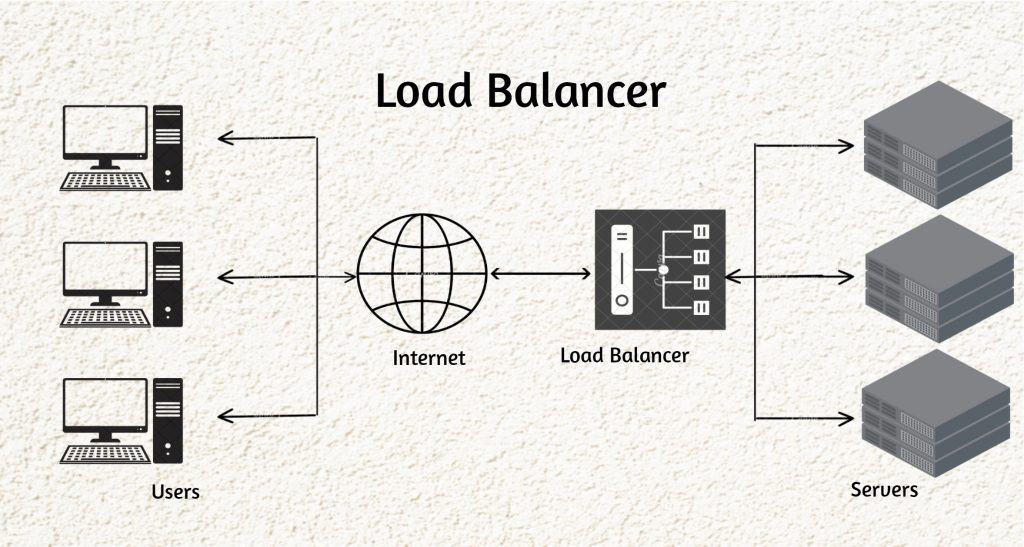

Load balancers operate based on various algorithms and methods to distribute traffic evenly across servers. Here’s an overview of the typical process:

- Client Sends a Request: A client initiates a request, such as accessing a website or a web application.

- Request Reaches the Load Balancer: The incoming request reaches the load balancer, which acts as a traffic cop, managing the distribution of requests.

- Load Balancer Determines Server: Using predefined algorithms (e.g., Round Robin, Least Connections, Weighted Distribution), the load balancer selects an appropriate server from the pool to handle the request.

- Request Forwarded to the Selected Server: The load balancer forwards the client’s request to the chosen server in the backend.

- Server Processes the Request: The selected server processes the request, performs necessary computations, and sends the response back to the load balancer.

- Load Balancer Sends Response to Client: The load balancer receives the response from the server and forwards it to the client, completing the communication cycle.
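The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the `LoadBalancer` class, the `make_backend` helper, and the server names are all hypothetical, and the backends are plain functions standing in for real servers.

```python
from typing import Callable, Dict, List

# A hypothetical backend: any function that turns a request into a response.
Backend = Callable[[str], str]


def make_backend(name: str) -> Backend:
    """Build a fake server that labels its responses with its own name."""
    def handle(request: str) -> str:
        return f"{name} handled {request}"
    return handle


class LoadBalancer:
    def __init__(self, pool: Dict[str, Backend],
                 select: Callable[[List[str]], str]):
        self.pool = pool
        self.select = select  # pluggable selection algorithm

    def handle(self, request: str) -> str:
        server = self.select(list(self.pool))  # determine the server
        response = self.pool[server](request)  # forward; the server processes it
        return response                        # send the response to the client


pool = {name: make_backend(name) for name in ("server-a", "server-b")}
# Placeholder algorithm: always pick the first server in the pool.
lb = LoadBalancer(pool, select=lambda names: names[0])
print(lb.handle("GET /index.html"))  # server-a handled GET /index.html
```

Keeping the selection algorithm as a separate function means the rest of the flow stays the same regardless of which algorithm is plugged in.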

Key Benefits of Load Balancing:

Scalability:

- Load balancing facilitates the scaling of applications by efficiently distributing traffic across multiple servers, accommodating increased user demand.

High Availability:

- Redundancy through multiple servers ensures that if one server fails, others can seamlessly handle the traffic, minimizing downtime.

Optimized Resource Utilization:

- Efficient distribution of workloads prevents individual servers from becoming overloaded, leading to better resource utilization.

Improved Performance:

- Load balancing improves response times and ensures a smoother user experience by distributing traffic to the servers best suited to handle specific requests.

Load Balancing Algorithms

Load balancing algorithms determine how incoming network traffic is distributed across multiple servers or resources, which in turn affects performance, fault tolerance, and resource utilization. The choice of algorithm depends on factors such as the application’s characteristics, server capacities, and desired outcomes. Here is an overview of the two main categories and the most common algorithms in each:

Static Load Balancing: Static Load Balancing is a method where the distribution of tasks or requests among servers is predetermined and remains constant, regardless of the current system conditions. It involves a fixed assignment of workloads to servers, offering simplicity but potentially leading to uneven resource utilization. Examples include Round Robin and Weighted Distribution algorithms.

Dynamic Load Balancing Algorithm: Dynamic Load Balancing Algorithms are adaptive mechanisms that continuously assess the real-time conditions of servers and distribute workloads based on factors like server health, response times, and current workloads. These algorithms dynamically adjust the load distribution to optimize performance and resource utilization in changing environments. Examples include Least Connections and Dynamic Weight Adjustment algorithms, which adapt to changing workloads and server states.

Round Robin:

Round Robin is a straightforward and widely used static load balancing algorithm that distributes incoming requests across a rotating sequence of servers. The algorithm works in a circular manner, sending each new request to the next server in line and returning to the first server after the last. Every server therefore receives roughly the same number of requests, which balances the workload well when requests are similar in cost. Round Robin is easy to implement and requires minimal configuration, making it suitable for environments where servers have similar capacities. It does not consider server load or performance, so a slow or busy server receives the same share as an idle one, but its simplicity makes it an effective choice where a basic load balancing approach is sufficient.
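As a rough illustration of the rotation, here is a minimal Python sketch. The `RoundRobinBalancer` class and the server names are hypothetical, and the pool is assumed to be fixed for the lifetime of the balancer.

```python
from itertools import cycle


class RoundRobinBalancer:
    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool must not be empty")
        # cycle() repeats the sequence forever, wrapping back to the start.
        self._rotation = cycle(list(servers))

    def next_server(self):
        """Return the next server in the rotation."""
        return next(self._rotation)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
assignments = [balancer.next_server() for _ in range(5)]
print(assignments)
# ['server-a', 'server-b', 'server-c', 'server-a', 'server-b']
```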

Weighted Distribution:

Weighted Distribution is a static load balancing algorithm designed for clusters whose servers have varying capacities or capabilities. Each server is assigned a weight based on factors such as processing power, available resources, or overall performance. The algorithm then allocates incoming requests in proportion to the assigned weights, so a server with weight 3 receives about three times as many requests as a server with weight 1. This control enables better resource utilization in heterogeneous server environments. Weighted Distribution is particularly valuable when seeking to avoid overloading less powerful servers or when specific servers need to handle a larger share of the workload. Because the weights are fixed, the algorithm requires careful consideration of server characteristics to assign appropriate values.
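One simple way to realize proportional allocation is to repeat each server in the rotation as many times as its weight. The sketch below assumes integer weights; the `weighted_rotation` function and the server names are hypothetical. Note that this naive expansion sends consecutive requests to the same heavy server, which real implementations often smooth out.

```python
from collections import Counter
from itertools import cycle


def weighted_rotation(weights):
    """Return an endless iterator of server names, each appearing
    in proportion to its integer weight."""
    expanded = [name
                for name, weight in weights.items()
                for _ in range(weight)]
    if not expanded:
        raise ValueError("at least one server needs a positive weight")
    return cycle(expanded)


rotation = weighted_rotation({"big-server": 3, "small-server": 1})
picks = [next(rotation) for _ in range(8)]
print(Counter(picks))
```

Over the eight requests in this example, `big-server` is chosen six times and `small-server` twice, matching the 3:1 weights.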

IP Hash:

IP Hash is a load balancing algorithm that provides session persistence by consistently routing a particular client’s requests to the same server. This is achieved by hashing the client’s IP address and using the resulting hash value to determine the destination server. As long as the client’s IP address and the server pool stay the same, a user interacting with a web application or service keeps talking to one specific server throughout their session. This approach is especially valuable where maintaining session state is crucial, such as in e-commerce applications or user-specific content delivery. The trade-off is that IP Hash makes it harder to scale server clusters dynamically, because adding or removing a server changes which server many clients map to, and it might not be suitable for every load balancing scenario. Careful consideration of application requirements and potential limitations is essential when opting for IP Hash load balancing.
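The mapping from address to server can be sketched as a hash followed by a modulo. This is an illustrative assumption, not how any particular product does it: the `pick_server` function is hypothetical, SHA-256 is an arbitrary choice of stable hash, and the addresses come from the reserved documentation range.

```python
import hashlib


def pick_server(client_ip: str, servers: list) -> str:
    """Map a client IP to a server; the same IP and pool give the same server."""
    # Use a stable hash (Python's built-in hash() is randomized for strings
    # on each run, so it would break persistence across restarts).
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["server-a", "server-b", "server-c"]
first = pick_server("203.0.113.7", servers)
second = pick_server("203.0.113.7", servers)
print(first == second)  # True: repeated requests land on the same server
```

Because the index depends on `len(servers)`, changing the pool size remaps many clients, which is the scaling challenge described above.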

Least Connections:

Least Connections is a dynamic load balancing strategy that directs incoming traffic to the server with the fewest active connections. This approach aims to distribute the load more evenly by sending new requests to the server currently handling the least traffic. Least Connections adapts to real-time server workloads, preventing uneven resource use and potential server overload. It is most useful where request durations or server capacities vary, since connection counts reflect how busy each server actually is. The trade-off is that Least Connections requires monitoring of server states and may introduce overhead in tracking connection counts. It is a valuable choice for optimizing resource utilization and keeping applications responsive and scalable.
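The bookkeeping this requires can be sketched as a counter per server that goes up when a connection opens and down when it closes. The `LeastConnectionsBalancer` class and its method names are hypothetical, and the sketch is single-threaded; a real balancer would need to protect the counters against concurrent updates.

```python
class LeastConnectionsBalancer:
    def __init__(self, servers):
        # Active connection count per server, all starting at zero.
        self.active = {name: 0 for name in servers}

    def acquire(self):
        """Pick the server with the fewest active connections and count
        the new connection. Ties go to the server listed first."""
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        """Call when a connection to the server closes."""
        if self.active[server] > 0:
            self.active[server] -= 1


lb = LeastConnectionsBalancer(["server-a", "server-b"])
first = lb.acquire()   # server-a (both idle, tie goes to the first)
second = lb.acquire()  # server-b (server-a now has one connection)
lb.release(first)      # server-a finishes its request
third = lb.acquire()   # server-a again, since it is idle
print(first, second, third)
```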

Least Response Time:

In the realm of dynamic load balancing, the “Least Response Time” algorithm stands out as a strategy that intelligently directs incoming traffic to the server exhibiting the shortest response time. By prioritizing servers based on their real-time responsiveness, this algorithm ensures efficient resource utilization and an enhanced user experience. When a new request is received, the Least Response Time algorithm dynamically evaluates server performance and routes the request to the server with the quickest response, minimizing latency and optimizing overall system responsiveness. This approach is particularly advantageous in environments where server workloads fluctuate, as it enables adaptive load distribution. However, it necessitates continuous monitoring of server response times and may introduce complexities in determining the optimal server in real-time. Nonetheless, the emphasis on minimizing response times makes Least Response Time a strategic choice for applications prioritizing speed and efficiency.
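A minimal sketch of this idea keeps a running average of each server's observed response time and picks the lowest. The details here are assumptions for illustration: the `LeastResponseTimeBalancer` class is hypothetical, the averaging uses an exponentially weighted moving average with an arbitrary smoothing factor `alpha`, and servers with no measurements yet are tried first.

```python
class LeastResponseTimeBalancer:
    def __init__(self, servers, alpha=0.5):
        self.alpha = alpha  # weight given to the newest measurement
        # None means "no response time observed yet".
        self.avg_ms = {name: None for name in servers}

    def pick(self):
        """Return the server with the lowest average response time.
        Unmeasured servers sort first so each gets probed at least once."""
        def sort_key(server):
            avg = self.avg_ms[server]
            return -1.0 if avg is None else avg
        return min(self.avg_ms, key=sort_key)

    def record(self, server, elapsed_ms):
        """Fold a newly observed response time into the running average."""
        previous = self.avg_ms[server]
        if previous is None:
            self.avg_ms[server] = elapsed_ms
        else:
            self.avg_ms[server] = (self.alpha * elapsed_ms
                                   + (1 - self.alpha) * previous)


lb = LeastResponseTimeBalancer(["server-a", "server-b"])
lb.record("server-a", 120.0)
lb.record("server-b", 40.0)
print(lb.pick())              # server-b is currently faster
lb.record("server-b", 400.0)  # server-b slows down: average becomes 220.0
print(lb.pick())              # server-a is now the better choice
```

The moving average is one way to handle the continuous monitoring the text mentions: recent measurements count more, so the balancer reacts to a slowdown without being thrown off by a single outlier.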

Adaptive:

In the dynamic landscape of load balancing, the Adaptive algorithm emerges as a sophisticated strategy designed to intelligently respond to changing network conditions and server workloads. This algorithm leverages predictive modeling and historical data to anticipate future demands and proactively adjust the distribution of incoming traffic. By dynamically adapting to evolving patterns, Adaptive Load Balancing optimizes resource allocation, ensuring efficient utilization of server capacities. This approach is particularly effective in environments with predictable traffic variations, allowing the system to preemptively distribute workloads based on anticipated demands. While offering enhanced responsiveness, Adaptive Load Balancing requires careful calibration and regular updates to its predictive models. Nevertheless, its ability to foresee and adapt to changing workloads positions it as a strategic choice for environments where anticipating traffic patterns is crucial for maintaining optimal performance.
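The text does not specify a particular predictive model, so the following is only a toy sketch under stated assumptions: the forecast is a plain average over a sliding window of recent utilization samples, and traffic shares are set in proportion to each server's predicted spare capacity. The `AdaptiveWeights` class, the window size, and the 0.5 default for servers with no history are all hypothetical choices.

```python
from collections import deque


class AdaptiveWeights:
    def __init__(self, servers, window=5):
        # Keep only the most recent `window` utilization samples per server.
        self.history = {name: deque(maxlen=window) for name in servers}

    def observe(self, server, utilization):
        """Record a utilization sample from 0.0 (idle) to 1.0 (saturated)."""
        self.history[server].append(utilization)

    def forecast(self, server):
        """Predict the next utilization as the mean of recent samples."""
        samples = self.history[server]
        # With no history, assume half loaded (an arbitrary starting guess).
        return sum(samples) / len(samples) if samples else 0.5

    def weights(self):
        """Return each server's traffic share, proportional to its
        predicted headroom (1 - forecast utilization)."""
        headroom = {s: max(0.0, 1.0 - self.forecast(s)) for s in self.history}
        total = sum(headroom.values())
        if total == 0:
            # Every server is predicted to be saturated: split evenly.
            return {s: 1.0 / len(headroom) for s in headroom}
        return {s: h / total for s, h in headroom.items()}


adaptive = AdaptiveWeights(["server-a", "server-b"])
for sample in (0.8, 0.8):
    adaptive.observe("server-a", sample)  # server-a has been busy
adaptive.observe("server-b", 0.2)         # server-b has been mostly idle
print(adaptive.weights())  # server-b gets roughly 80% of new traffic
```

A real adaptive balancer would likely use a richer model, for example one that accounts for time-of-day patterns, but the structure is the same: observe, forecast, then adjust the distribution before the load arrives.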

Conclusion

In conclusion, load balancing is a crucial technique in modern network and application infrastructure, essential for ensuring high availability, reliability, and efficient performance. By effectively distributing network or application traffic across multiple servers, load balancers prevent any single server from becoming a bottleneck, thereby enhancing the user experience and optimizing resource utilization. Whether implemented via hardware, software, or as a service, load balancing can be tailored to suit various architectures and requirements, from small-scale applications to large, distributed environments. As technology continues to evolve, so too will load balancing strategies, adapting to new challenges such as cloud computing, containerization, and the ever-increasing demand for scalable, resilient online services. Understanding and effectively implementing load balancing is key for any organization looking to maintain a robust, high-performance online presence.